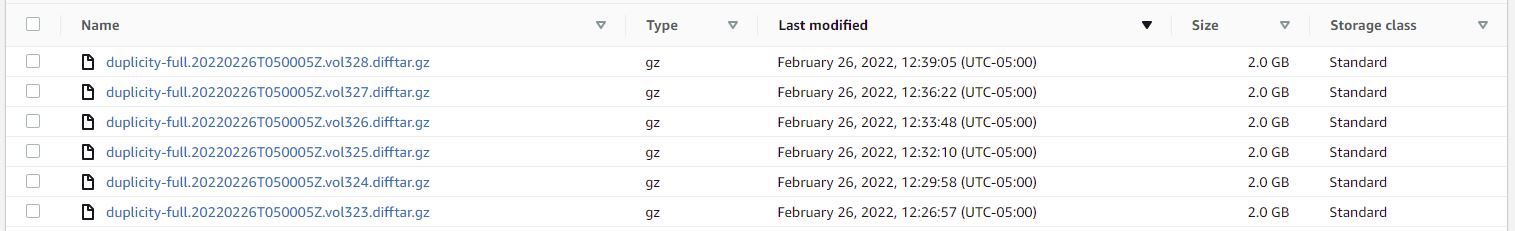

Meanwhile, with the boto3+s3 protocol, my 1 TB of data uploaded in half the time it took with the s3+http protocol. But here's the problem: if I add up the sizes shown in the AWS S3 console (screenshot below):

the total is way bigger than 1 TB. What is even weirder is that I see the number of objects still increasing even after the duply backup task has finished at the backend:

--------------[ Backup Statistics ]--------------

StartTime 1645828858.98 (Fri Feb 25 17:40:58 2022)

EndTime 1645895278.36 (Sat Feb 26 12:07:58 2022)

ElapsedTime 66419.38 (18 hours 26 minutes 59.38 seconds)

SourceFiles 307805

SourceFileSize 1135560226054 (1.03 TB)

NewFiles 307805

NewFileSize 1135560226054 (1.03 TB)

DeletedFiles 0

ChangedFiles 0

ChangedFileSize 0 (0 bytes)

ChangedDeltaSize 0 (0 bytes)

DeltaEntries 307805

RawDeltaSize 1135493620993 (1.03 TB)

TotalDestinationSizeChange 1069767354158 (996 GB)

Errors 0

-------------------------------------------------
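As a side note on units, here is a small sketch that converts the byte counts from the statistics above. It suggests the "TB" and "GB" figures duplicity prints are binary units (powers of 1024), so the same numbers come out near 1.14 TB and 1070 GB in decimal units. The helper names are my own and only for illustration; I have not checked which units the S3 console uses.

```python
def binary_units(num_bytes):
    """Return (TiB, GiB) for a byte count, using powers of 1024."""
    return num_bytes / 1024**4, num_bytes / 1024**3


def decimal_units(num_bytes):
    """Return (TB, GB) for a byte count, using powers of 1000."""
    return num_bytes / 1000**4, num_bytes / 1000**3


# Byte counts copied from the backup statistics above.
source_file_size = 1135560226054
destination_size_change = 1069767354158

for label, value in [
    ("SourceFileSize", source_file_size),
    ("TotalDestinationSizeChange", destination_size_change),
]:
    tib, gib = binary_units(value)
    tb, gb = decimal_units(value)
    print(f"{label}: {tib:.2f} TiB / {gib:.0f} GiB (binary), "
          f"{tb:.2f} TB / {gb:.0f} GB (decimal)")
```

This unit difference is only around 10%, so on its own it would not explain a total that is far above 1 TB.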

By contrast, both the object count (511) and the total size (1.03 TB) were accurate with the boto protocol. Do you know what could cause this discrepancy? Could the multipart upload algorithm be responsible?
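To cross-check the console numbers independently, something like the following could be used. It is a minimal sketch, not anything duplicity or duply provides: it assumes boto3 is installed and credentials are configured, the function names are my own, and the bucket name and prefix in the usage line are placeholders. It sums the sizes of the completed objects and counts multipart uploads that were started but never completed or aborted.

```python
def summarize_objects(pages):
    """Return (object_count, total_bytes) from list_objects_v2 response pages."""
    count = 0
    total = 0
    for page in pages:
        # "Contents" is absent from a page when no keys match.
        for obj in page.get("Contents", []):
            count += 1
            total += obj["Size"]
    return count, total


def count_incomplete_uploads(pages):
    """Return the number of in-progress multipart uploads from list_multipart_uploads pages."""
    return sum(len(page.get("Uploads", [])) for page in pages)


def inspect_bucket(bucket, prefix=""):
    """Query S3 and return (object_count, total_bytes, incomplete_uploads)."""
    import boto3  # imported here so the helpers above work without boto3

    s3 = boto3.client("s3")
    object_pages = s3.get_paginator("list_objects_v2").paginate(
        Bucket=bucket, Prefix=prefix
    )
    upload_pages = s3.get_paginator("list_multipart_uploads").paginate(
        Bucket=bucket, Prefix=prefix
    )
    count, total = summarize_objects(object_pages)
    return count, total, count_incomplete_uploads(upload_pages)
```

Usage would look like `inspect_bucket("my-backup-bucket", "some/prefix/")`, with both arguments replaced by the real values. A nonzero number of incomplete uploads would at least hint that leftover multipart parts are involved, though that is only a guess on my side.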

Best,

Tashrif
